
QUALITATIVE AND QUANTITATIVE RESULTS

GLOBAL ILLUMINATION

Global illumination is a general name for a group of algorithms used in 3D computer graphics to add more realistic lighting to 3D scenes. Such algorithms take into account not only the light that comes directly from a light source (direct illumination), but also the light from the same source that reaches a surface after being reflected by other surfaces in the scene (indirect illumination). Images rendered using global illumination algorithms often appear more photorealistic than images rendered using only direct illumination algorithms. Radiosity, ray tracing, beam tracing, cone tracing, path tracing, Metropolis light transport, ambient occlusion, photon mapping, and image-based lighting are examples of algorithms used in global illumination, some of which may be used together to yield results that are both fast and accurate [1].

The goal of global illumination is to compute all possible light interactions in a given scene, and thus obtain a truly photorealistic image. All combinations of diffuse and specular reflections and transmissions must be accounted for. Effects such as color bleeding and caustics must be included in a global illumination simulation [2].

Radiosity

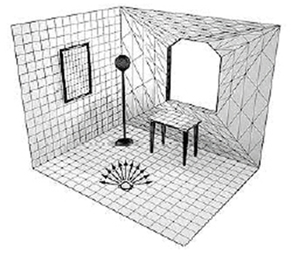

Radiosity calculates the light intensity for discrete points in the environment by dividing the original surfaces into a mesh of smaller surfaces [3].

Radiosity is the general name for a category of lighting simulation algorithms that entails subdividing the scene into discrete patches and subsequently determining a condition in which the light transfer between the patches constitutes an energetic equilibrium. This procedure is easiest for materials that are uniform diffusers. Consequently, the drawback of this approach, as opposed to its counterpart, ray tracing, is that it is difficult to include the behavior of specular materials. The great advantage, however, is that it is an intrinsically more efficient method than ray tracing for computing the overall light distribution in a scene [4].
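
The equilibrium described above can be illustrated with a minimal sketch. It assumes purely diffuse patches and the classical radiosity relation B_i = E_i + rho_i * sum_j F_ij * B_j, solved here by simple repeated substitution. The function name `solve_radiosity`, the two-patch scene, and the form-factor values are hypothetical and chosen only for illustration; they do not come from the cited sources.

```python
def solve_radiosity(emission, reflectance, form_factors, iterations=100):
    """Iterate B_i = E_i + rho_i * sum_j F_ij * B_j until it settles.

    emission[i]        -- light emitted by patch i (E_i)
    reflectance[i]     -- diffuse reflectance of patch i (rho_i), in [0, 1)
    form_factors[i][j] -- fraction of energy leaving patch i that reaches patch j
    """
    n = len(emission)
    radiosity = list(emission)  # start from the emitted light only
    for _ in range(iterations):
        # Each pass adds one more "bounce" of light between the patches.
        radiosity = [
            emission[i]
            + reflectance[i]
            * sum(form_factors[i][j] * radiosity[j] for j in range(n))
            for i in range(n)
        ]
    return radiosity


# Hypothetical scene: patch 0 emits light, patch 1 only reflects it.
emission = [1.0, 0.0]
reflectance = [0.5, 0.5]
form_factors = [[0.0, 0.2], [0.2, 0.0]]
B = solve_radiosity(emission, reflectance, form_factors)
```

Because the result depends only on the patches and not on a camera position, the same solution can in principle be reused for any viewpoint, which is one way to read the efficiency claim above.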

Ray tracing

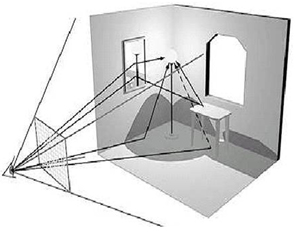

Ray tracing works by tracing rays backward from each pixel on the screen into the 3D model to derive the light intensity [5].

Ray tracing is a rendering method based on global illumination. It traces rays of light from the eye back through the image plane into the scene. The rays are then tested against all objects in the scene to determine whether they intersect any of them. If a ray misses all objects, that pixel is shaded with the background color; otherwise it is shaded according to the nearest surface the ray hits. Ray tracing handles shadows, multiple specular reflections, and texture mapping in a straightforward manner [6].
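
The per-pixel procedure above can be sketched as follows. This is a minimal illustration, assuming a scene made only of spheres, an eye at the origin, and an image plane at z = -1; it marks hit pixels with `#` instead of computing real shading. The names `hit_sphere` and `render` are hypothetical.

```python
import math


def hit_sphere(origin, direction, center, radius):
    """Return the nearest hit distance in front of the origin, or None.

    Assumes the ray origin lies outside the sphere.
    """
    oc = [o - c for o, c in zip(origin, center)]
    a = sum(d * d for d in direction)
    b = 2.0 * sum(d * o for d, o in zip(direction, oc))
    c = sum(o * o for o in oc) - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None  # the ray misses the sphere entirely
    t = (-b - math.sqrt(disc)) / (2.0 * a)
    return t if t > 0.0 else None


def render(width, height, spheres, background="."):
    """Trace one ray per pixel from the eye through the image plane."""
    eye = (0.0, 0.0, 0.0)
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            # Map the pixel centre to [-1, 1] on the plane z = -1.
            px = 2.0 * (x + 0.5) / width - 1.0
            py = 1.0 - 2.0 * (y + 0.5) / height
            direction = (px, py, -1.0)
            # Test the ray against every object in the scene.
            hits = [
                t
                for t in (hit_sphere(eye, direction, c, r) for c, r in spheres)
                if t is not None
            ]
            # A miss gets the background; a hit would normally be shaded
            # from the nearest intersection, min(hits).
            row += "#" if hits else background
        rows.append(row)
    return rows


# Hypothetical scene: one unit sphere three units in front of the eye.
image = render(5, 5, [((0.0, 0.0, -3.0), 1.0)])
```

A full ray tracer would replace the `#` marker with a shading computation at the nearest hit and spawn further rays for shadows and reflections.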

Photon mapping

The creation of photorealistic images of three-dimensional models is central to computer graphics. Photon mapping, an extension of ray tracing, makes it possible to efficiently simulate global illumination in complex scenes. Photon mapping can simulate caustics (focused light, like shimmering waves at the bottom of a swimming pool), diffuse inter-reflections (e.g., the "bleeding" of colored light from a red wall onto a white floor, giving the floor a reddish tint), and participating media (such as clouds or smoke) [7].

With photon mapping, light packets called photons are sent out into the scene from the light source. Whenever a photon intersects with a surface, the intersection point, incoming direction, and energy of the photon are stored in a cache called the photon map. After intersecting the surface, a new direction for the photon is selected using the surface's bidirectional reflectance distribution function (BRDF). There are two methods for determining when a photon should stop bouncing.

- The first is to decrease the energy of the photon at each intersection point. Using this method, when a photon's energy reaches a predetermined low-energy threshold, the bouncing stops.

- The second method uses a Monte Carlo technique called Russian roulette. In this method, at each intersection the photon has a certain likelihood of continuing to bounce within the scene, and that likelihood decreases with each bounce.
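
The two stopping rules can be compared with a small sketch. It is an illustration under stated assumptions rather than a reference implementation: every surface is assumed to have the same reflectance, and the survival probability is assumed to shrink by a constant factor per bounce. The function names and the parameters `threshold`, `survival`, `decay`, and `max_bounces` are hypothetical.

```python
import random


def bounces_with_threshold(energy, reflectance, threshold):
    """Count intersections processed before energy drops below threshold.

    Assumes 0 <= reflectance < 1 and threshold > 0 so the loop terminates.
    """
    bounces = 0
    while energy >= threshold:
        energy *= reflectance  # the surface absorbs part of the energy
        bounces += 1
    return bounces


def bounces_with_roulette(survival, decay, rng, max_bounces=1000):
    """Count bounces when the photon survives each hit with a shrinking probability."""
    bounces = 0
    while bounces < max_bounces and rng.random() < survival:
        bounces += 1
        survival *= decay  # continuing becomes less likely after each bounce
    return bounces


fixed = bounces_with_threshold(energy=1.0, reflectance=0.5, threshold=0.1)
rng = random.Random(0)  # seeded so repeated runs give the same sequence
sampled = [bounces_with_roulette(0.9, 0.8, rng) for _ in range(5)]
```

The threshold rule is deterministic for a given path, whereas Russian roulette ends paths at random, so individual photons may stop early or late.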

Photon mapping is generally a preprocess and is carried out before the main rendering of the image. Often the photon map is stored on disk for later use. Once the actual rendering has started, each intersection of an object and a ray is tested to see if it is within a certain range of one or more stored photons, and if so, the energy of the photons is added to the energy calculated using a standard illumination equation. The slowest part of the algorithm is searching the photon map for the nearest photons to the point being illuminated. Photon mapping was designed to work primarily with ray tracers [8].
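
The lookup step during rendering can be sketched as below. This is a simplified illustration: the photon map is assumed to be a plain list of `(position, incoming_direction, energy)` tuples, the search is a linear scan, and nearby photon energy is simply summed and added to a direct-lighting value. The name `gather_energy`, the sample photons, and the radius are hypothetical. Practical implementations commonly use a spatial structure such as a kd-tree to speed up this search.

```python
import math


def gather_energy(photon_map, point, radius):
    """Sum the energy of all stored photons within radius of point."""
    total = 0.0
    for position, _incoming_direction, energy in photon_map:
        if math.dist(position, point) <= radius:
            total += energy
    return total


# Hypothetical photon map: two photons near the origin, one far away.
photon_map = [
    ((0.0, 0.0, 0.0), (0.0, -1.0, 0.0), 0.2),
    ((0.05, 0.0, 0.0), (0.0, -1.0, 0.0), 0.3),
    ((2.0, 0.0, 0.0), (0.0, -1.0, 0.0), 0.5),
]

direct = 1.0  # stand-in for the result of a standard illumination equation
shaded = direct + gather_energy(photon_map, (0.0, 0.0, 0.0), radius=0.1)
```

The linear scan visits every stored photon for every shaded point, which reflects why the nearest-photon search dominates the running time.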

References